Absurdism corner

- Thread starter Bosbeetle

- Start date

WizardStan

Mega GP Mania

- Joined

- May 24, 2008

- Messages

- 16,733

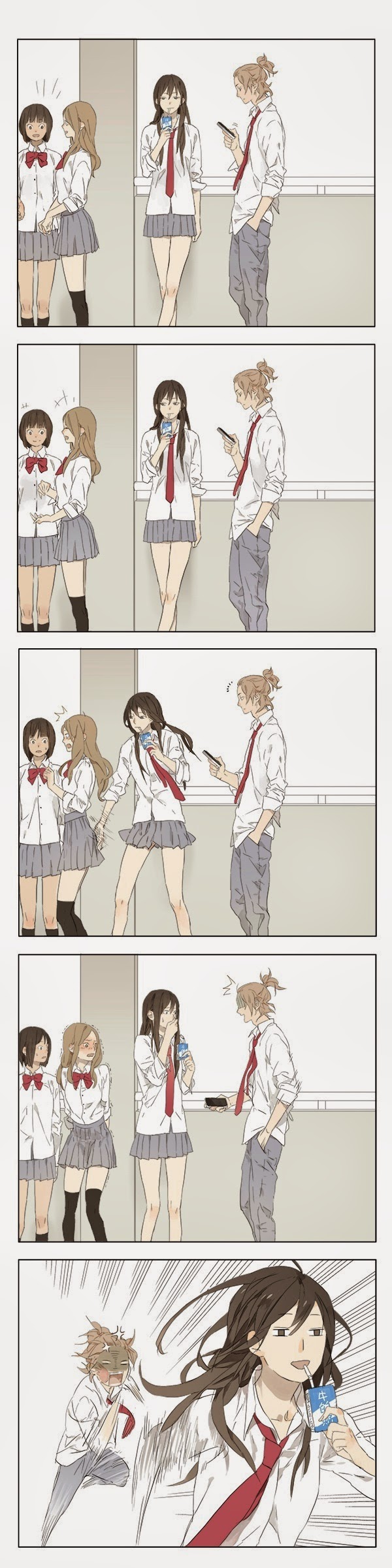

Looking for the part of the post where something has "gone wrong". Can't see any problems.

ElPoco

Hardcore Member

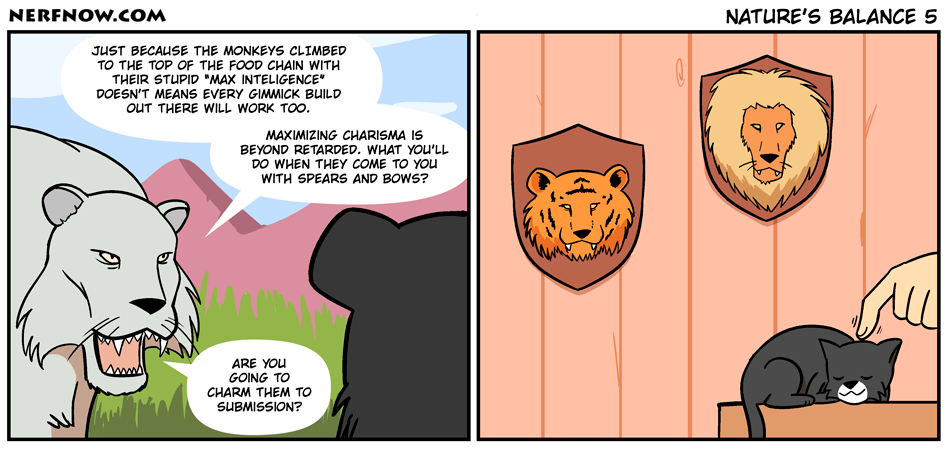

The cats aren't neutered

The young lad/lass up there looks like a quick learner. I know that with that sort of early-years education, we can prevent children becoming IoT developers.

Talk to your kids about web security - before someone else does.

ClockworkCoder

Chaotic Neutral

jparish1977

Member

- Joined

- Oct 1, 2016

- Messages

- 89

- Age

- 48

put that into your URL (if you have FireFox) HTML: javascript:var a=function(){alert(0.1*0.2); }()

Is it the pseudo URL, floating point math, alert dialog, or Firefox that you find the most absurd? (Now I have to remember which machine I have Firefox on to see if it does something unexpected.)

Sent from my SM-N950U using Tapatalk

put that into your URL (if you have FireFox) HTML: javascript:var a=function(){alert(0.1*0.2); }()

Iceweasel 38.5 here. Nothing happens.

I'm curious to know what you'd expect to happen. Presumably something absurd?

Djoga'Ro

moonstruck

- Joined

- Apr 3, 2016

- Messages

- 2,628

put that into your URL (if you have FireFox) HTML: javascript:var a=function(){alert(0.1*0.2); }()

Pale Moon 28.5.0 here. Nothing happens.

Maybe absurd are we, trying it, expecting something to happen and getting confused?

jparish1977

I assume the expectation is an alert dialog displaying a slightly inaccurate value on browsers that support JavaScript pseudo URLs.
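As a rough sketch of what that alert would show, the same expression can be evaluated in any JavaScript console without the pseudo URL. The variable name `product` is only for illustration.

```javascript
// The expression from the javascript: pseudo URL, evaluated directly.
const product = 0.1 * 0.2;

// Neither 0.1 nor 0.2 is exactly representable in binary floating point,
// so the product is not the double nearest to 0.02.
console.log(product);          // 0.020000000000000004
console.log(product === 0.02); // false
```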

Sent from my SM-N950U using Tapatalk

WizardStan

What is "not that good" about it? Do the same thing in C and you get 0.02000000141561031341552734375. That's just the nature of floating point numbers in a computer: you can't represent an infinite precision decimal, you can only represent "close enough". And if you'll notice, the JavaScript output is actually closer to what you'd expect than the C. Or is that the "not that good"? That it produces a different result than a true IEEE 754 representation should?
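The C figure quoted above looks like a single-precision (`float`) result, whereas JavaScript numbers are IEEE 754 doubles. Here is a minimal sketch of that comparison, assuming the C code used `float` (the thread does not show it) and using `Math.fround` as a stand-in for rounding to single precision. The names `singleResult` and `doubleResult` are illustrative.

```javascript
// Round each operand, and then the product, to single precision.
// Assumption: this mirrors a C program that multiplies two float values.
const singleResult = Math.fround(Math.fround(0.1) * Math.fround(0.2));

// Plain JavaScript arithmetic, carried out in double precision.
const doubleResult = 0.1 * 0.2;

console.log(singleResult.toPrecision(9)); // "0.0200000014"
console.log(doubleResult);                // 0.020000000000000004

// The double-precision result lands closer to 0.02 than the single-precision one.
const errSingle = Math.abs(singleResult - 0.02);
const errDouble = Math.abs(doubleResult - 0.02);
console.log(errDouble < errSingle); // true
```

Both results follow the same standard; the difference in this sketch comes from the number of bits kept, not from one language being less faithful to IEEE 754.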

levi

Still fresh, damnit!

You mean an infinite precision binary number, presumably. In decimal, the answer is 0.02 exactly, or 1/50 (because you're calculating 1/5 x 1/10). In binary that's slightly more than 0.000001 (1/64), with an expansion that never terminates. When you try to store that in floating point, you effectively shift the binary point until the number becomes an integer, with all of the digits to the left. With an infinitely long (or simply too long) expansion that's not possible, so the value is cut off at the format's maximum resolution.
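To see why 1/50 never terminates in binary, the digits can be generated by long division in base 2. This is only a sketch; `binaryFraction` is a hypothetical helper, not something from the thread.

```javascript
// Hypothetical helper: returns the first `digits` binary digits after the
// point of num/den, for integers with 0 <= num < den.
function binaryFraction(num, den, digits) {
  let out = "";
  let rem = num;
  for (let i = 0; i < digits; i++) {
    rem *= 2;            // shift one binary place to the left
    if (rem >= den) {
      out += "1";
      rem -= den;
    } else {
      out += "0";
    }
  }
  return out;
}

console.log("0." + binaryFraction(1, 64, 6));  // 0.000001 (terminates)
console.log("0." + binaryFraction(1, 50, 12)); // 0.000001010001 (keeps going)
```

The remainder for 1/50 never reaches zero, so the digits eventually repeat instead of stopping, and any fixed-width format has to cut them off somewhere.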

You could argue that this would be better if it used a decimal mantissa, so that it stored 2x10^-2 exactly, but in terms of the transistors and things inside a chip, having to divide by 10 is relatively a killer. Dividing by 2 is simply a matter of shifting the bits one place to the right and discarding the carry (also known as an ASR, an arithmetic shift right), which is easy to do in terms of transistors and traces. To divide by 10 (1010 in binary) you're asking it to do long division every time, so it would be much slower. A slight loss of precision is usually considered preferable; in the JavaScript you're only out by something like 2.842170943040401e-14% (according to Python's limited maths ability).
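A small sketch of both points, the shift and the size of the error. The names `exact`, `computed` and `relErrPercent` are illustrative, and `exact` is itself only the double nearest to 0.02, so the percentage is an estimate.

```javascript
// Halving an integer is a single arithmetic shift right.
console.log(40 >> 1);  // 20
console.log(-40 >> 1); // -20 (>> keeps the sign bit)

// Relative error of the double-precision product, as a percentage of 0.02.
const exact = 0.02;
const computed = 0.1 * 0.2;
const relErrPercent = (Math.abs(computed - exact) / exact) * 100;
console.log(relErrPercent); // on the order of 1e-14 percent
```

The exact figure depends on how the comparison is done, but it comes out at the same order of magnitude as the percentage quoted above.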

levi

Still fresh, damnit!

It's Jake's mate with the extra hand!

How wonderful it would be to have some more responsible engineers (and engineering managers) in society.

If the customer isn't sure, then engineering companies should take pride in selling them what they need, not in pulling funfair-grade chicanery on them for a petty increase in share value!
