bsp


but using assumptions of linear scaling in clock speed

this was not an assumption. I actually changed the clock speed and the performance scaled exactly linearly.

The results were posted in the c64_tools thread (or try for yourself if you do not believe me).

based on one very synthetic benchmark

I never claimed that this was not a synthetic benchmark. I repeatedly stated that more (i.e. different) benchmarks need to be done.

I don't see a branch in the inner loop like you do

I left the compiler generated C64p .asm source in the .tar.gz so you can see for yourself although it can be seen in the source code, too.

Usually a graphics render loop consists of the mandatory for(y..) and for(x..) loops, but the fractal benchmark requires an additional inner loop, and such a loop requires a branch (surprise, surprise). In fact, up to 24 branches (the default maximum iteration depth) are executed per pixel.
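To make the branch visible, here is a minimal sketch of such a three-level loop nest. It assumes a Julia-style escape-time iteration in 16.16 fixed point; the formula, the coordinate mapping, and all names (`fx_mul`, `escape_iterations`, `render_fractal`) are illustrative assumptions, not the code from the .tar.gz.

```c
#include <stdint.h>

#define FX_ONE   65536   /* 1.0 in 16.16 fixed point (assumed format) */
#define MAX_ITER 24      /* default maximum iteration depth from the post */

/* Multiply two 16.16 values via a 64-bit intermediate.
 * Assumes >> on a negative value is an arithmetic shift (true for GCC). */
static int32_t fx_mul(int32_t a, int32_t b)
{
    return (int32_t)(((int64_t)a * b) >> 16);
}

/* Iterate z = z^2 + c until |z|^2 > 4 or MAX_ITER passes are done. */
static int escape_iterations(int32_t zr, int32_t zi, int32_t cr, int32_t ci)
{
    int i;
    for (i = 0; i < MAX_ITER; i++) {      /* third loop: one loop branch per pass */
        int32_t rr = fx_mul(zr, zr);
        int32_t ii = fx_mul(zi, zi);
        if (rr + ii > 4 * FX_ONE)         /* data-dependent early exit */
            break;
        zi = 2 * fx_mul(zr, zi) + ci;
        zr = rr - ii + cr;
    }
    return i;
}

/* Render w*h pixels covering [-2, 2) x [-2, 2); one iteration count per pixel. */
void render_fractal(uint8_t *out, int w, int h, int32_t cr, int32_t ci)
{
    for (int y = 0; y < h; y++) {
        int32_t zi = -2 * FX_ONE + (int32_t)(((int64_t)4 * FX_ONE * y) / h);
        for (int x = 0; x < w; x++) {
            int32_t zr = -2 * FX_ONE + (int32_t)(((int64_t)4 * FX_ONE * x) / w);
            out[y * w + x] = (uint8_t)escape_iterations(zr, zi, cr, ci);
        }
    }
}
```

The iteration count differs from pixel to pixel, so the inner loop cannot simply be unrolled to a fixed length, which is what makes it awkward for a branch-averse core.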

It doesn't help that the measurements weren't done by the same person to ensure all the variables were correct

Totally agree. I had not gotten around to integrating M-HT's optimized code before; I have done that now.

First of all, he turned off the graphics output / video memory writes. That was not a good idea, since otherwise he would have seen that his version of the benchmark rendered only half of the screen! (To be exact: the bottom half was almost constant color and needed very few iterations per pixel.)

It was a simple mistake and easily fixed (the constant has to be written as _FP(-1.8f) instead of just -1.8f).

A quick glance at the source code also revealed a huge (and missed) optimization opportunity:

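A C compiler will not turn a signed integer division by 65536 into a plain arithmetic right shift! The division has to round toward zero while the shift rounds toward negative infinity, so the compiler emits extra fix-up code for negative values.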

Fixed that (replaced the division with the shift) and the performance improved by 44%.

(the precision difference is negligible).
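A minimal sketch of the two variants, assuming a 16.16 format and a 64-bit intermediate product. The real `_FPmpy` in the attached source may look different, and the function names here are hypothetical.

```c
#include <stdint.h>

/* Division variant: C requires the quotient to be truncated toward zero,
 * so the compiler cannot emit just a shift for signed operands. */
int32_t fpmpy_div(int32_t a, int32_t b)
{
    return (int32_t)(((int64_t)a * b) / 65536);
}

/* Shift variant: rounds toward negative infinity instead.
 * Right-shifting a negative signed value is implementation-defined in C;
 * this sketch assumes an arithmetic shift, which is what GCC does. */
int32_t fpmpy_shift(int32_t a, int32_t b)
{
    return (int32_t)(((int64_t)a * b) >> 16);
}
```

The two functions agree whenever the product is non-negative or an exact multiple of 65536; otherwise the shift result is one LSB (1/65536) lower, which is the precision difference referred to above.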

like loading a value from a LUT then using that value to load into another LUT. That's the kind of thing that's really going to hurt the DSP, not this

You mean like some kind of "movetable effect", e.g. the classic 90s Winamp visualizations?

I have to dig in my old source backups. Back then I once wrote an implementation of that and a friend of mine created some nice patterns.

I'll add this to the benchmarks/demos and I am very curious to see if that really brings the DSP to its knees. This kind of table lookup is definitely not too uncommon.

btw, using only one particular input set makes this test even more ridiculously synthetic

bollocks, sry. All test parameters are passed via a struct; they are not constants that the compiler can optimize. Plus the main parameters (c1, c2) change each frame, which is why some frames render a lot faster than others. Just the screen size and the maximum iteration depth are constant, but even those are passed via the struct.

not to mention the assumption that his fixed-point ARM code would use the same amount of power as your VFP-based ARM code

I have to admit that I did not consider that. The fixed point version does indeed use less power (~200mW) than the float version compiled with -mfpu=neon (or -mfpu=vfp, does not make a difference).

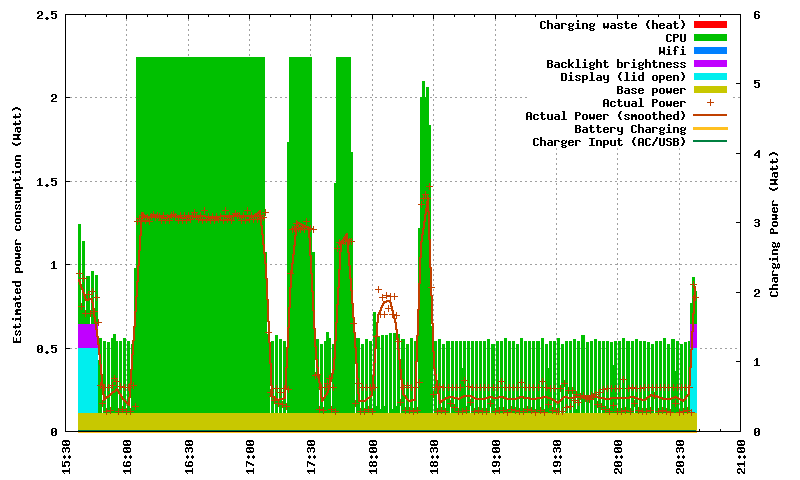

I reran the "stress_test.sh" script: The ARM-only float version uses ~1.8 W, the fixed point ARM-only version of the benchmark ~1.6 W, and the DSP-only version ~1.1 W.

I'm really baffled as to how M-HT didn't get better performance than he did. But without knowing details of precision it's hard to say

I attached the updated source code so you can see for yourself. Except for the mistake mentioned above he did a good job at converting the source to fixed point (maybe he used the DSP variant and just replaced the macro implementations? I did not compare the sources, does not really matter anyway).

If I did a faster version than you did would you really turn around then and say the Cortex-A8 is faster than the DSP?

I would say that for rendering this (and similar) effects, the Cortex-A8 is faster.

In fact, after optimizing the code as described above, it turns out that the GPP _is_ faster in this case:

s: fractal_benchmark-09Oct2013.txt

GPP only: (float, -mfpu=vfp)

[...] 3600 iterations in 65343 millisecs.

DSP only: (fixpoint via iqmath)

[...] 3600 iterations in 10841 millisecs.

GPP only: (fixpoint, original _FPmpy)

[...] 3600 iterations in 11054 millisecs.

GPP only: (fixpoint, optimized _FPmpy)

[...] 3600 iterations in 7652 millisecs.

GPP+DSP:

[...] 3600 iterations in 5054 millisecs.
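The relative numbers quoted in this post follow directly from these timings. A small sketch that turns them into iterations per second and speed ratios; the helper names are hypothetical, the millisecond values are copied from the log above.

```c
#include <stdio.h>

#define ITERATIONS 3600.0   /* iterations per run, from the log */

/* Throughput of one run, given its wall-clock time in milliseconds. */
double iterations_per_second(double ms)
{
    return ITERATIONS * 1000.0 / ms;
}

/* How many times faster the second run is than the first. */
double speedup(double slow_ms, double fast_ms)
{
    return slow_ms / fast_ms;
}

/* Print throughput for every configuration listed in the log. */
void print_summary(void)
{
    static const struct { const char *name; double ms; } runs[] = {
        { "GPP float (-mfpu=vfp)",          65343.0 },
        { "DSP fixpoint (iqmath)",          10841.0 },
        { "GPP fixpoint, original _FPmpy",  11054.0 },
        { "GPP fixpoint, optimized _FPmpy",  7652.0 },
        { "GPP+DSP",                         5054.0 },
    };
    for (size_t i = 0; i < sizeof runs / sizeof runs[0]; i++)
        printf("%-32s %7.1f iterations/s\n",
               runs[i].name, iterations_per_second(runs[i].ms));
}
```

With these inputs, `speedup(11054, 7652)` comes out at roughly 1.44 (the 44% improvement from replacing the division), and `speedup(10841, 7652)` at roughly 1.42 for the optimized GPP fixed-point build against the DSP-only run.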

This is what I had initially anticipated and why I considered the benchmark a worst/bad case scenario for the DSP -- it hates branches.

Just so that you understand we are on the same page here: My statement on the previous page was sarcastic and exaggerated but it is, from what I have seen so far, not very far from the truth. In the c64_tools thread I already said that more benchmarks need to be done to come to a final conclusion.

The original purpose of this exercise - as I already said - was to see how a piece of source code run on the DSP would stack up against the same one run on the GPP.

I assumed that when people consider using the DSP, they do not want to learn too much about it and just write plain C code and use whatever libraries are readily available.

Sure, the algorithm itself could be optimized (always the best kind of optimization) and your suggestions sound reasonable but the same optimizations could be done on the DSP side.

Let's just leave it at that and focus our energy on creating new benchmarks or working on real applications.

You did this before.. starting with "it's faster and it's more power efficient" but then adding a caveat "but it's more work to use." That doesn't reduce hype, that just makes people who won't program this stuff themselves call devs lazy for not doing it.

devs _are_ lazy. That's another thing I already said and I included myself.

hey, if every last bit of software were optimized to the fullest, we would not need to buy new hardware every few years to make up for "sloppy" coding, would we ? (by that I mean doing the same tasks with newer software versions on newer HW at the same speed as with older SW versions on older hardware, of course)

I do not expect everyone to suddenly become DSP assembly / optimization experts. Personally I would use some intrinsics for some small loops but generally stick to plain "C".

There are many other platforms out there which do not have a DSP core but maybe one or three additional GPP cores.

It makes sense to optimize software for that, if processing power is the bottleneck.

The DSP should be seen as just another core which is simply not as tightly integrated (e.g. in the dev. toolchain (compiler/debugger)) as a regular, additional GPP core would be. Once ppl start writing software for the DSP, they will realize that this is not _that_ much different from using multi-threading.

It should be fairly easy to port a c64_tools DSP component to another multi-core architecture. I already mentioned that I am thinking about writing a version of c64_tools that can be used on standard PCs. The DSP would simply be replaced by a thread. Could be useful for development purposes (I guess not everyone writes/compiles code directly on the Pandora).
