> Looks and sounds really awesome what you've achieved. Kudos!
>
> I don't know what your long-term plans are for this file format, but IF it should be adopted widely, I think staying with (L)GPL will be a problem. I see it as totally useful for browsers, but Chrome/Blink (or whatever you call it), for example, AFAIK only depends on non-GPL code, and Firefox is MPL (though GPL-compatible, from what I read)... so at the very least, it would be great to have a BSD-style licensed decoder library available. But these are just my 2 cents. I also think the format could be used for games: you could display a low-resolution version of the image quite quickly and then keep updating the textures while the game is already running, steadily increasing the texture resolution...

Wouldn't LGPL for a decoder library be OK? LGPL is significantly less viral than GPL, so AFAIK even proprietary browsers could use it (they just can't modify the library itself without releasing the code of those modifications, etc).

Free Lossless Image Format (FLIF)

- Thread starter _wb_

LGPL does not fit ecosystems that want to add their own (incompatible) conditions to the whole system. E.g. the Eclipse (IDE) project did (at least at some time in the past) not accept LGPL code. Depending on your POV that is either a weakness or a strength of copyleft.

Exophase

Nothing good will ever come of Exophase.

> Wouldn't LGPL for a decoder library be OK? LGPL is significantly less viral than GPL, so afaik even proprietary browsers could use it (they can just not modify the library itself then without releasing the code of those modifications, etc).

I don't see any issues with using LGPL for something like this.

You may however find some resistance from some (probably not many) GPL advocates. As per this: http://www.gnu.org/licenses/why-not-lgpl.en.html

I'm currently running some benchmarks so I can draw a plot like this one Google made to show how good WebP is.

I'm using the following corpus of images to test -- I hope this is somewhat representative, and if anyone has more images I can use for testing, feel free to point me to them!

- 20 medical images: Lukas 2D 8 Bit Medical Image Corpus - A set of two dimensional 8 bit radiographs (http://www.data-compression.info/Corpora/LukasCorpus/)

- 20 photos: Kodak Lossless True Color Image Suite (http://r0k.us/graphics/kodak/)

- 5 images with transparency: taken from the Google WebP gallery: https://developers.google.com/speed/webp/gallery2

- 43 misc images from the SIPI Image Database: http://sipi.usc.edu/database/database.php?volume=misc (I left out image 7.2.01 because ImageMagick convert did something weird with it)

- 20 images with sparse colors, picked by Niels Fröhling (can't find the link anymore)

- some images I hand-picked: 7 "brochure"-like images (mix of text and photo), 5 cartoon/clipart images, 19 images of geographical maps, 6 screenshots, 55 random images with transparency from pngimg.com

I will be including the following formats in my comparison:

- FLIF with progressive decoding (2D interlacing) enabled, like in the video

- FLIF without progressive decoding, just scanlines

- PNG as produced by ImageMagick convert -interlace PNG (this produces Adam7 progressive decoding PNGs)

- PNG as produced by ImageMagick convert -quality 95 (this produces relatively small PNGs relatively fast)

- PNG with Adam7, made as small as possible with pngcrush -brute

- PNG without Adam7, made as small as possible with pngcrush -brute followed by pngout

- lossless JPEG2000, as produced by ImageMagick convert foo.png -quality 100 foo.jp2

- lossless WebP, as produced by cwebp 0.4.3

- lossless BPG, as produced by BPG 0.9.5

Results will be ready soonish, I'll post them here.

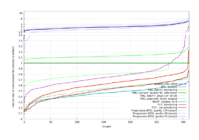

Here are the results of my compression benchmark:

As you can see, FLIF beats any other lossless image format in the test.

You can also clearly see how much JPEG and JPEG 2000 suck on non-photographic images. Just look at the right hand side (the images they don't handle well), and take into account that the top part of this plot has a logarithmic y-axis!

FLIF even beats lossy JPEG (at high quality). At low enough quality JPEG beats FLIF on half of the corpus, but on the other half, JPEG is so crappy that overall, FLIF wins easily. And that's even though JPEG has no alpha support, so for the images that have an alpha channel, it's not even encoding that!

I'm quite happy with these results. They are very promising.

In terms of encode/decode speed: both are slow and not very optimized at the moment (no assembler code etc, just C++ code). A median file took 3 seconds to encode (1 second for a p25 file, 6 seconds for a p75 file), which is slower than most other algorithms: WebP took slightly less than a second for a median file (0.5s for p25, 2s for p75), PNG and JPEG2000 took about half a second. It's not that bad though: BPG took 9 seconds on a median file (2.5s for p25, 25s for p75), and brute-force pngcrushing took something like 15 seconds on a median file (6s for p25, over 30s for p75), so at least it's already better than that.

Decode speed to restore the full lossless image and write it as a png is not so good: about 0.75s for a median file, 0.25s for a p25 file, 1.5s for a p75 file. That's roughly 3 to 5 times slower than the other algorithms. However, decoding a partial (lossy) file is much faster than decoding everything, so in a progressive decoding scenario, the difference would not be huge.

There's still room for optimizing the encode/decode speed, but it's not very useful to do that before the bitstream is somewhat finalized. The prototype implementation I have now is not extremely fast, but at least it's in the right ballpark. Most likely even an optimized decoder will still be slower than an optimized PNG decoder, but the difference will be small enough to not matter much (certainly compared to the time won by downloading fewer bytes).
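For anyone who wants to reproduce this kind of timing summary: the median / p25 / p75 figures are just quartiles over the per-file times. A minimal sketch, assuming a hypothetical list of per-file encode times in seconds (the numbers below are made up for illustration, they are not measurements from this benchmark):

```python
import statistics

def timing_quartiles(seconds):
    """Return (p25, median, p75) for a list of per-file timings in seconds."""
    # With n=4, statistics.quantiles returns the three quartile cut points.
    p25, median, p75 = statistics.quantiles(seconds, n=4, method="inclusive")
    return p25, median, p75

# Hypothetical per-file encode times (illustrative only).
example_times = [0.5, 1.0, 2.0, 3.0, 4.0, 6.0, 9.0]
p25, median, p75 = timing_quartiles(example_times)
print(f"p25={p25:.2f}s median={median:.2f}s p75={p75:.2f}s")
```

Reporting quartiles rather than a mean keeps a handful of very slow files from dominating the summary.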

levi

Still fresh, damnit!

What does your X axis refer to exactly? 'Images' - is that the number of images? If so, does that suggest that after 200 images, 200 FLIF images take up more space than 200 PNGs?

pmprog

DNF (Did Not Finish)

- Joined: Apr 25, 2011

- Messages: 4,150

> What does your X axis refer to exactly? 'Images' - is that number of images. If so, does that suggest that after 200 images, 200 flif images take up more space than 200 PNGs?

I wondered this, but if you read above, he's got 200 images - I assume each "one" on the X axis is a different picture. Pictures will vary in size and complexity, thus giving the differing levels of compression.

I think that's right

> What does your X axis refer to exactly? 'Images' - is that number of images. If so, does that suggest that after 200 images, 200 flif images take up more space than 200 PNGs?

No, it's not cumulative, it's just sorted from best relative compression to worst relative compression. So if you look at the middle of the X-axis, you get the relative file size of a 'typical' file. At the far right you see the worst-case behavior, at the far left you see the best-case behavior.

In other words, in the worst case, FLIF outputs a file that is 15% larger than the corresponding PNG file. That only happens on a couple of files though. A typical FLIF file would be 35% smaller than the corresponding PNG file. In the best case you can trim away 80 or 90% or so.

But those extreme cases (best and worst) are probably not that interesting. It's probably the middle segment that is most representative.
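To make the "sorted, not cumulative" reading of the plot concrete, here is a rough sketch of how such a curve can be built: one FLIF/PNG size ratio per image, sorted from best to worst. The function name and the byte sizes are hypothetical, chosen only so that the best, typical and worst cases resemble the percentages mentioned above.

```python
def relative_size_curve(flif_sizes, png_sizes):
    """Per-image FLIF/PNG size ratios, sorted from best to worst compression."""
    ratios = [f / p for f, p in zip(flif_sizes, png_sizes)]
    return sorted(ratios)

# Hypothetical sizes in bytes for five images (illustrative only).
png = [1000, 2000, 4000, 1000, 5000]
flif = [200, 1300, 2600, 1150, 3000]

curve = relative_size_curve(flif, png)
best = curve[0]                    # far left of the plot
typical = curve[len(curve) // 2]   # middle of the X-axis
worst = curve[-1]                  # far right of the plot
print(f"best={best:.2f} typical={typical:.2f} worst={worst:.2f}")
```

Each point on the X-axis is a single image, so a ratio above 1.0 at the far right only says that those particular files came out larger than their PNGs.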

I threw my current prototype on github:

https://github.com/jonsneyers/FLIF

WARNING: this is a rough prototype (e.g. command line argument parsing is a joke, and so is error handling), and the format is not finalized yet, so there is no reason whatsoever to think that files encoded with this version of the program, will decode with future versions of it. So use it at your own risk!

slaeshjag

¯\_(ツ)_/¯

How does it handle small images? Say, in the 16x16 -> 256x256 range? Are those included in your samples?

> does that suggest that after 200 images, 200 flif images take up more space than 200 PNGs?

Most certainly not: the total size is more like the area under the line (but that's assuming that all original files have the same size, which is not true).

Looking at total filesizes, here are some results (these are the "normal" PNGs, the pngcrushed ones would be a bit smaller but I didn't store those and it takes ages to recompute those):

- Niels Fröhling's 20 sparse images: 9,347,698 bytes for the PNGs, 6,039,242 for FLIF (interlacing), 5,595,095 for FLIF (non-interlacing)

- Kodak corpus (20 photos): 13,213,035 bytes for the PNGs, 8,644,410 for FLIF (interlacing), 8,809,599 for FLIF (non-interlacing)

- 55 somewhat random transparent images: 6,487,553 for the PNGs, 3,116,096 for FLIF (interlacing), 3,014,889 for FLIF (non-interlacing)

- medical images (20 xrays etc): 22,439,286 for the PNGs, 13,346,196 for FLIF (interlacing), 13,124,689 for FLIF (non-interlacing)

- maps (19 images, typically using a small color palette) : 4,194,243 for the PNGs, 2,898,795 for FLIF (interlacing), 2,448,978 for FLIF (non-interlacing)
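The totals above can be turned into relative savings with a few lines of arithmetic. A small sketch using the byte counts exactly as listed (the dictionary keys and the `savings` helper are just names picked for this example):

```python
# Total sizes in bytes, copied from the list above:
# corpus -> (PNG, FLIF interlaced, FLIF non-interlaced)
TOTALS = {
    "sparse": (9_347_698, 6_039_242, 5_595_095),
    "kodak": (13_213_035, 8_644_410, 8_809_599),
    "transparent": (6_487_553, 3_116_096, 3_014_889),
    "medical": (22_439_286, 13_346_196, 13_124_689),
    "maps": (4_194_243, 2_898_795, 2_448_978),
}

def savings(png_bytes, flif_bytes):
    """Fraction of bytes saved by FLIF relative to the PNG total."""
    return 1.0 - flif_bytes / png_bytes

for name, (png, interlaced, scanline) in TOTALS.items():
    print(f"{name:12s} interlaced: {savings(png, interlaced):6.1%}  "
          f"non-interlaced: {savings(png, scanline):6.1%}")
```

On these totals the savings land between roughly 30% and 55% of the PNG sizes, and the Kodak photos are the only set where the interlaced variant is the smaller of the two.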

> Wouldn't LGPL for a decoder library be OK? LGPL is significantly less viral than GPL, so afaik even proprietary browsers could use it (they can just not modify the library itself then without releasing the code of those modifications, etc).

TBH, I'm really not an expert when it comes to licensing, so LGPL is fine in that regard, but I usually can't use either GPL or LGPL licensed code. Say you want to use it on iOS: dynamic linking is not even possible/allowed there, so games or apps that could make great use of your file format in a mobile environment are ruled out from the very beginning.

But I don't want to hold you back from your great invention with licensing discussions, I just wanted to give some input on that side... because I think (I really don't know) the wide adoption of PNG comes from its license: if you want to load images in an app, I think most developers will choose libpng, because it's a no-brainer: you just know you won't have any problems with licensing.

> How does it handle small images? Say, in the 16x16 -> 256x256 range? Are those included in your samples?

Good question. No, the images I tried are larger, at least 256x256 but usually more like a screenful or something.

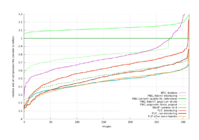

Here are some results for small images.

I took this set of svg icons: https://github.com/icons8/flat-color-icons

Then I used "convert foo.svg -depth 8 foo.png" to render those icons. It produced 312 PNG files of size 48x48.

This was the result of running the benchmark on those small images (the advantage of small images is that running benchmarks on them does not take much time):

Since interlacing does not make much sense on such small images, I should probably add a simple heuristic that lets FLIF disable interlacing by default on small images and enable it by default on large images.
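Such a heuristic could be as simple as a pixel-count cutoff. A rough sketch, purely as an illustration: the function name and the 128x128 threshold are assumptions made up for this example, not anything taken from the FLIF code.

```python
def default_interlacing(width, height, min_pixels=128 * 128):
    """Decide whether interlacing should be on by default for an image.

    min_pixels is a hypothetical cutoff chosen for illustration only;
    a real encoder would tune it against benchmark results.
    """
    return width * height >= min_pixels

print(default_interlacing(48, 48))      # small icon
print(default_interlacing(1920, 1080))  # full-screen image
```

An explicit command line option would still override the default in either direction.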

As you can see, FLIF performs about the same as PNG when the PNG is crunched as far as possible.

WebP performs slightly better. JPEG sucks as expected.

I could probably shave off quite some bytes by encoding the palette more efficiently. These small images tend to have a small number of colors so their colors get palette indexed. I didn't spend much time optimizing the outputting of the color palette itself, and on such small images the palette itself (basically a list of N colors) contributes quite a bit to the overall file size. With some tweaking I think it should be possible to beat WebP. That would be a nice challenge on this set of images.

fusion_power

Advanced Member

> 20 medical images: Lukas 2D 8 Bit Medical Image Corpus - A set of two dimensional 8 bit radiographs

This reminds me of when I worked in a hospital IT department. There were high-res greyscale X-ray images with up to 1024 grey levels, and they had special monitors to display them (surprisingly, normal monitors can't do 1024 grey levels). So to really see how good the image quality is, you should try to get some digital X-ray images for your tests.

Kev2442

Still French

It seems that FLIF is already implementing some kind of bilinear mipmapping, isn't it?

Could this be modified into trilinear? I can see a lot of possibilities regarding video games...

You asked about ProRes: it's an Apple-branded codec using 4:2:2 chroma subsampling with JPEG-like compression, so it's only spatial compression. It's mostly used for semi-pro masters, and Final Cut converts footage into it for video editing.

> You may however find some resistance from some (probably not many) GPL advocates. As per this: http://www.gnu.org/licenses/why-not-lgpl.en.html

What was the point of posting this in a thread where the only (repeated) license request is to not use copyleft at all? Incidentally, that is also my experience from maintaining an LGPL library a couple of years ago. The only licensing request was to relicense more permissively (the above-mentioned inclusion within Eclipse). Nobody ever suggested GPL. But if FLIF lives up to its promise, it would fit the linked article perfectly.

At the moment the encoder accepts only 8 bit RGBA input (which implies 2^8 = 256 shades of grey), but everything in the codec is generic in terms of bit-depth, so it can easily be generalized to 10, 12 or 16 bits per color.
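As a quick sanity check on the numbers in this exchange, the number of distinct grey levels is simply 2 raised to the bit depth. A tiny sketch (the helper name is made up for this example):

```python
def grey_levels(bits_per_channel):
    """Number of distinct values a channel of the given bit depth can hold."""
    return 2 ** bits_per_channel

for bits in (8, 10, 12, 16):
    print(f"{bits:2d} bits -> {grey_levels(bits)} levels")
```

So the 1024 grey levels mentioned for the X-ray monitors correspond to a 10-bit channel, one of the depths named above.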

> I could probably shave off quite some bytes by encoding the palette more efficiently. These small images tend to have a small number of colors so their colors get palette indexed. I didn't spend much time optimizing the outputting of the color palette itself, and on such small images the palette itself (basically a list of N colors) contributes quite a bit to the overall file size. With some tweaking I think it should be possible to beat WebP. That would be a nice challenge on this set of images.

I did some tweaks to the outputting of the color palette -- it shaves off something like 100 bytes on a 250 color palette, which is not a lot but it makes quite a bit of a difference in relative terms for such small images.

I didn't quite beat WebP yet on these small 48x48 images, but I more or less match its performance now:

Thanks, slaeshjag, for your question, which led me to look at this particular type of image.

Linux-SWAT

Forum Addict!

- Joined: Feb 13, 2010

- Messages: 9,290

I don't know the subject well, so this may be a bad question, but does it handle raw images? I have some from a photo shoot and they are incredibly large.

ible

professional vim user

i can verify compiling on lubuntu 15.04 after installing libpng++-dev (though perhaps one of its dependencies was the requirement).

the usage message could be a bit more helpful. i've tried to convert to flif files:

flif -d 1 big.png big.flif

returns something like "Unknown extension to write to: .flif", and if i try something like this:

flif -d 1 big.png

I get a segfault.

soon we just need to make our GPL web browser which supports it and intelligently supports the 1000s of tabs that this community opens

Compressing:

flif input.png output.flif

Decompressing:

flif -d input.flif output.png

You can also use pnm/ppm/pgm instead of png (but that format does not have alpha).

You can corrupt a file by cutting it, and it should still decompress, e.g. take the first 10 kilobytes:

dd if=foo.flif of=lossyfoo.flif bs=1024 count=10
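If dd is not at hand, the same truncation can be done in a few lines of Python. A minimal sketch that copies only the first 10 kilobytes of a file, like the dd command above; the helper name is made up, and the file names in the usage note are just the ones from that command:

```python
def truncate_copy(src_path, dst_path, max_bytes=10 * 1024):
    """Copy at most the first max_bytes bytes of src_path to dst_path."""
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        dst.write(src.read(max_bytes))
```

Calling `truncate_copy("foo.flif", "lossyfoo.flif")` should produce the same bytes as the dd invocation, and a larger `max_bytes` keeps more of the file.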

I'll make the help message and argument handling better, this version isn't really meant to be used by anyone yet

ible

professional vim user

great! that would be an excellent addition to your readme

so i have a jpg file about 6.3M, which converts into a png at 14M or ppm at 35M. the resulting flif is 8.6M. (PLT fails, ACB fails, if that means anything, and interestingly the output flifs from either ppm or png are identical. though if i convert the flif back to a png "the binary files [.png's] differ", though i can't see the difference.)

if i chop up the file as you mention using dd, i start seeing the upper part of the picture at about 2M (the lower half is an impressionistic painting of TV static), at about 4M i get the full picture but with weird colors. colors are a bit messy even up to 7.9M, though only in the lower half where it looks a bit glitchy.

let me know if the above results are strange (the flif is larger than the original jpg), as i could e-mail you the pic.
