ptitSeb

Serial Porter

Here is the test (source + executable, with a makefile; it should compile with Code::Blocks or cdevtools. Plain C, nothing fancy).

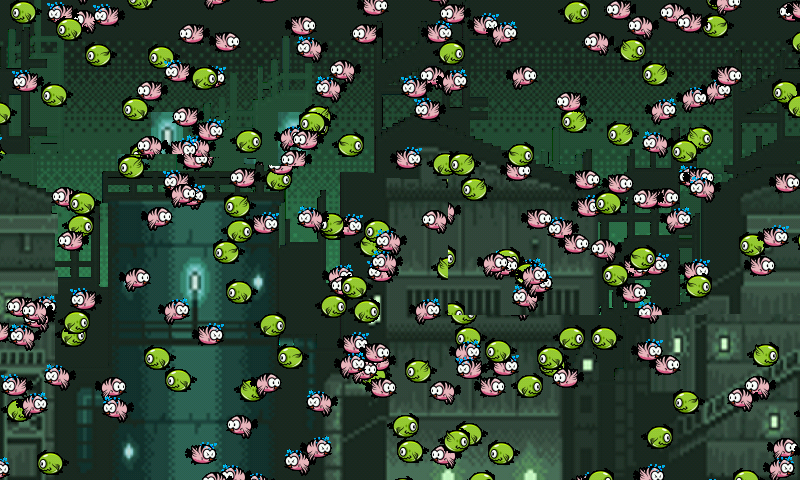

I load 4 large textures for the parallax background and build a small atlas for the sprites. There are 512 sprites on screen (you can easily change the number in the code), and the test prints the number of SDL ticks needed to draw 300 frames.

I use eglport for the GLES context.

These nice textures come from OpenGameArt: the flying things are by bevouliin and the parallax background is by Luis Zuno.

It's a very simple test (and there aren't many comments in the crappy code), using GLES 1 only. It's a 2D scenario, and everything is simply drawn back to front, with no depth test.

On my GigaHertz, using blending (GL_BLEND) is 10% faster than alpha testing (GL_ALPHA_TEST).
