Bosbeetle

Terminally lost

So yesterday Google claimed quantum supremacy.

There are two nice articles about it in this week's Nature:

www.nature.com

www.nature.com

It seems like a big milestone, but on the other hand it looks less like the execution of a really programmed piece of code and more like a measurement of some properties of the system itself. Surely this will come in handy for theoretical physics, and it might be a nice starting point. The analogy with the Wright brothers seems to fit.

To end in the words of the Google article:

"As a result of these developments, quantum computing is transitioning from a research topic to a technology that unlocks new computational capabilities. We are only one creative algorithm away from valuable near-term applications."

*Claim by Google; IBM says it would take days rather than years.

Quantum supremacy using a programmable superconducting processor - Nature

Quantum supremacy is demonstrated using a programmable superconducting processor known as Sycamore, taking approximately 200 seconds to sample one instance of a quantum circuit a million times, which would take a state-of-the-art supercomputer around ten thousand years to compute.

So if I am correct, they made a chip with 53 interconnected qubits (a 6x9 grid in which one qubit failed) and measured, in ~200 s, some pseudo-random quantum effect (a bit like the noise seen in laser scattering) that would take a regular computer 10,000 years* to simulate. They cannot check whether the outcome is correct, so to get some confidence they used the same chip, not fully interconnected, to tackle a problem that can be computed in reasonable time on regular computers. The outcomes of those measurements were correct.

Quantum computers promise to perform certain tasks much faster than ordinary (classical) computers. In essence, a quantum computer carefully orchestrates quantum effects (superposition, entanglement and interference) to explore a huge computational space and ultimately converge on a solution, or solutions, to a problem. If the numbers of quantum bits (qubits) and operations reach even modest levels, carrying out the same task on a state-of-the-art supercomputer becomes intractable on any reasonable timescale, a regime termed quantum computational supremacy [1]. However, reaching this regime requires a robust quantum processor, because each additional imperfect operation incessantly chips away at overall performance. It has therefore been questioned whether a sufficiently large quantum computer could ever be controlled in practice. But now, in a paper in Nature, Arute et al. [2] report quantum supremacy using a 53-qubit processor.

Arute and colleagues chose a task that is related to random-number generation: namely, sampling the output of a pseudo-random quantum circuit. This task is implemented by a sequence of operational cycles, each of which applies operations called gates to every qubit in an n-qubit processor. These operations include randomly selected single-qubit gates and prescribed two-qubit gates. The output is then determined by measuring each qubit.

The resulting strings of 0s and 1s are not uniformly distributed over all 2^n possibilities. Instead, they have a preferential, circuit-dependent structure, with certain strings being much more likely than others because of quantum entanglement and quantum interference. Repeating the experiment and sampling a sufficiently large number of these solutions results in a distribution of likely outcomes. Simulating this probability distribution on a classical computer using even today’s leading algorithms becomes exponentially more challenging as the number of qubits and operational cycles is increased.
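To make the sampling task concrete, here is a minimal Python sketch of a toy random circuit on 4 qubits. It is not the Sycamore circuit: the single-qubit gate set (√X, √Y, √W), the use of CZ as the two-qubit gate, the chain layout and all function names are assumptions chosen for illustration.

```python
import cmath
import math
import random

S = 1 / math.sqrt(2)
# Assumed single-qubit gate set (2x2 unitaries), chosen for illustration.
SQRT_X = [[S, -1j * S], [-1j * S, S]]
SQRT_Y = [[S, -S], [S, S]]
SQRT_W = [[S, -cmath.exp(1j * math.pi / 4) * S],
          [cmath.exp(-1j * math.pi / 4) * S, S]]
GATES = [SQRT_X, SQRT_Y, SQRT_W]


def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q of a state vector (list of complex)."""
    new = list(state)
    bit = 1 << q
    for i in range(len(state)):
        if not (i & bit):
            a, b = state[i], state[i | bit]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[i | bit] = gate[1][0] * a + gate[1][1] * b
    return new


def apply_cz(state, q1, q2):
    """Flip the sign of every amplitude in which both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-a if (i & mask) == mask else a for i, a in enumerate(state)]


def random_circuit_probs(n, cycles, rng):
    """Run a toy random circuit and return the ideal output probabilities."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j  # start in |00...0>
    for c in range(cycles):
        # Randomly selected single-qubit gate on every qubit.
        for q in range(n):
            state = apply_1q(state, rng.choice(GATES), q)
        # Prescribed two-qubit gates on alternating neighbour pairs.
        for q in range(c % 2, n - 1, 2):
            state = apply_cz(state, q, q + 1)
    return [abs(a) ** 2 for a in state]


def sample_bitstrings(probs, n, shots, rng):
    """Measure every qubit: draw bitstrings according to probs."""
    outcomes = rng.choices(range(len(probs)), weights=probs, k=shots)
    return [format(x, f"0{n}b") for x in outcomes]


rng = random.Random(0)
n = 4
probs = random_circuit_probs(n, cycles=8, rng=rng)
samples = sample_bitstrings(probs, n, shots=1000, rng=rng)

# Print the three most likely bitstrings and their ideal probabilities.
for x in sorted(range(2 ** n), key=lambda k: -probs[k])[:3]:
    print(format(x, f"0{n}b"), round(probs[x], 3))
```

The state vector here has 2^n entries, so the same brute-force approach that is instant for 4 qubits doubles in cost with every qubit added.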

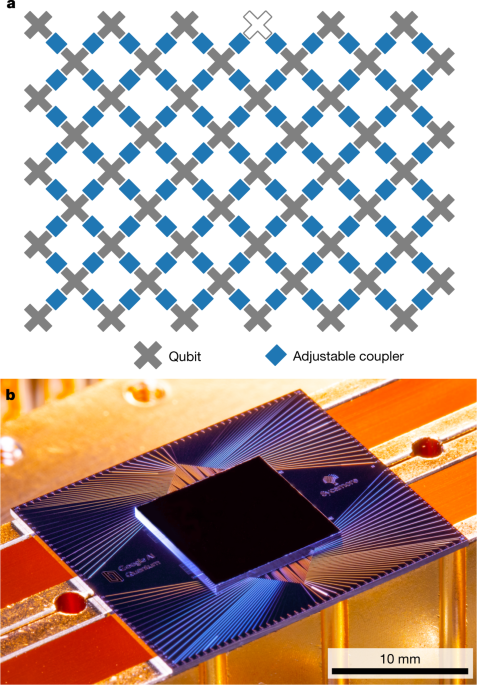

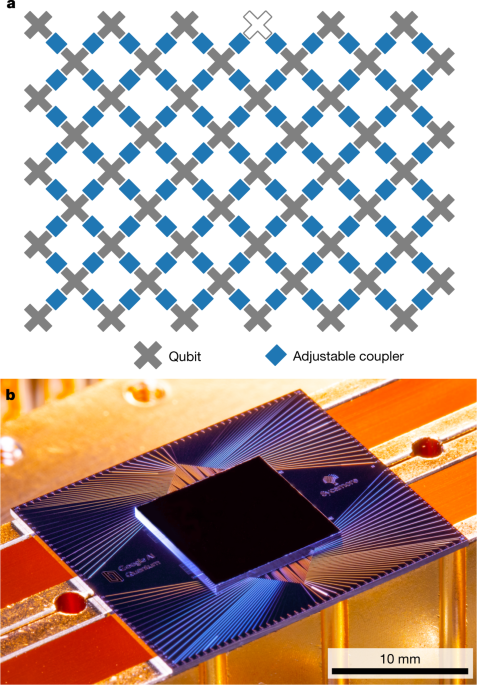

In their experiment, Arute et al. used a quantum processor dubbed Sycamore. This processor comprises 53 individually controllable qubits, 86 couplers (links between qubits) that are used to turn nearest-neighbour two-qubit interactions on or off, and a scheme to measure all of the qubits simultaneously. In addition, the authors used 277 digital-to-analog converter devices to control the processor.

When all the qubits were operated simultaneously, each single-qubit and two-qubit gate had approximately 99–99.9% fidelity, a measure of how similar an actual outcome of an operation is to the ideal outcome. The attainment of such fidelities is one of the remarkable technical achievements that enabled this work. Arute and colleagues determined the fidelities using a protocol known as cross-entropy benchmarking (XEB). This protocol was introduced last year [3] and offers certain advantages over other methods for diagnosing systematic and random errors.
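As a rough illustration of what XEB computes: in the linear variant used in the Sycamore paper, the fidelity is estimated as 2^n times the mean ideal probability of the observed bitstrings, minus 1. The sketch below assumes that form. The function name and the toy 2-qubit distribution are made up for the example, and the distribution was picked so that a perfect device scores exactly 1.

```python
def linear_xeb(ideal_probs, samples, n):
    """Linear XEB estimate: 2^n * mean(P_ideal(x_i)) - 1."""
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return (2 ** n) * mean_p - 1


n = 2
# Hypothetical ideal output distribution of some 2-qubit circuit.
ideal = {"00": 0.5, "01": 0.5, "10": 0.0, "11": 0.0}

# A perfect device only ever returns the strings the circuit favours.
perfect_samples = ["00", "01"] * 500
# A fully depolarised device returns every string equally often.
noise_samples = ["00", "01", "10", "11"] * 250

print(linear_xeb(ideal, perfect_samples, n))  # 1.0
print(linear_xeb(ideal, noise_samples, n))    # 0.0
```

A real device lands somewhere in between, which is why a value such as 0.8% or 0.1% still counts as a signal as long as it is clearly above the statistical noise.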

The authors’ demonstration of quantum supremacy involved sampling the solutions from a pseudo-random circuit implemented on Sycamore and then comparing these results to simulations performed on several powerful classical computers, including the Summit supercomputer at Oak Ridge National Laboratory in Tennessee (see go.nature.com/35zfbuu). Summit is currently the world’s leading supercomputer, capable of carrying out about 200 million billion operations per second. It comprises roughly 40,000 processor units, each of which contains billions of transistors (electronic switches), and has 250 million gigabytes of storage. Approximately 99% of Summit’s resources were used to perform the classical sampling.

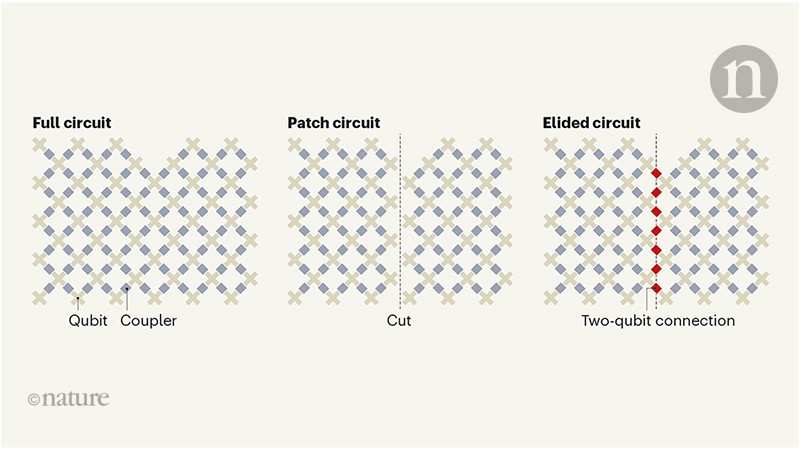

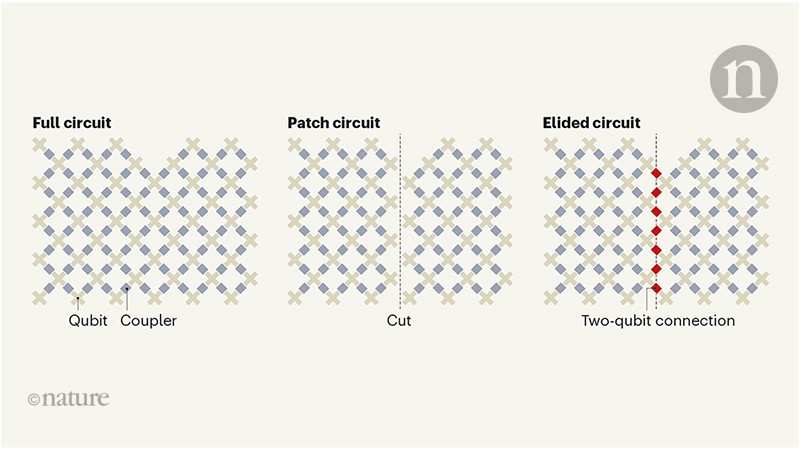

Verifying quantum supremacy for the sampling problem is challenging, because this is precisely the regime in which classical simulations are infeasible. To address this issue, Arute et al. first carried out experiments in a classically verifiable regime using three different circuits: the full circuit, the patch circuit and the elided circuit (Fig. 1). The full circuit used all n qubits and was the hardest to simulate. The patch circuit cut the full circuit into two patches that each had about n/2 qubits and were individually much easier to simulate. Finally, the elided circuit made limited two-qubit connections between the two patches, resulting in a level of computational difficulty that is intermediate between those of the full circuit and the patch circuit.
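A back-of-the-envelope calculation shows why cutting the circuit into patches helps so much. The sketch assumes a brute-force state-vector simulation that stores one complex amplitude per basis state at 8 bytes each, and a 26/27 split of the 53 qubits. Both are illustrative choices, not necessarily what the authors did.

```python
BYTES_PER_AMPLITUDE = 8  # assumption: two 32-bit floats per complex amplitude


def statevector_bytes(n_qubits):
    """Memory needed to store all 2^n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


full = statevector_bytes(53)
# Two independent patches: their state vectors add, they do not multiply.
patches = statevector_bytes(26) + statevector_bytes(27)

print(f"full circuit : {full / 1e15:.1f} PB")   # full circuit : 72.1 PB
print(f"two patches  : {patches / 1e9:.1f} GB")  # two patches  : 1.6 GB
```

Under these assumptions the two patches fit in the memory of an ordinary laptop, while the full state vector is a sizeable fraction of the 250 million gigabytes of storage quoted for Summit.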

Figure 1 | Three types of quantum circuit. Arute et al. [2] demonstrate that a quantum processor containing 53 quantum bits (qubits) and 86 couplers (links between qubits) can complete a specific task much faster than an ordinary computer can simulate the same task. Their demonstration is based on three quantum circuits: the full circuit, the patch circuit and the elided circuit. The full circuit comprises all 53 qubits and is the hardest to simulate on an ordinary computer. The patch circuit cuts the full circuit into two patches that are each relatively easy to simulate. Finally, the elided circuit links these two patches using a reduced number of two-qubit operations along reintroduced two-qubit connections and is intermediate between the full and patch circuits, in terms of its ease of simulation.

The authors selected a simplified set of two-qubit gates and a limited number of cycles (14) to produce full, patch and elided circuits that could be simulated in a reasonable amount of time. Crucially, the classical simulations for all three circuits yielded consistent XEB fidelities for up to n = 53 qubits, providing evidence that the patch and elided circuits serve as good proxies for the full circuit. The simulations of the full circuit also matched calculations that were based solely on the individual fidelities of the single-qubit and two-qubit gates. This finding indicates that errors remain well described by a simple, localized model, even as the number of qubits and operations increases.
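The "calculation based solely on the individual fidelities" can be sketched as a simple product: if errors are independent and local, the overall fidelity is roughly the product of the fidelities of every gate and every measurement. The error rates and gate counts below are placeholder values picked for illustration (the article does not give them), and the function name is hypothetical.

```python
def predicted_fidelity(e1, n1, e2, n2, e_meas, n_meas):
    """Product-of-fidelities estimate for a whole circuit.

    e1, e2, e_meas: error per single-qubit gate, two-qubit gate, measurement.
    n1, n2, n_meas: how many of each the circuit contains.
    """
    return (1 - e1) ** n1 * (1 - e2) ** n2 * (1 - e_meas) ** n_meas


# Assumed, illustrative numbers for a 53-qubit, 20-cycle circuit.
f = predicted_fidelity(
    e1=0.0016, n1=1113,      # single-qubit gates
    e2=0.0062, n2=430,       # two-qubit gates
    e_meas=0.038, n_meas=53  # one readout per qubit
)
print(f"predicted circuit fidelity: {f:.2%}")
```

With these placeholder numbers the product comes out at a fraction of a percent, which shows how quickly per-gate errors of well under 1% compound over more than a thousand operations.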

Arute and colleagues’ longest, directly verifiable measurement was performed on the full circuit (containing 53 qubits) over 14 cycles. The quantum processor took one million samples in 200 seconds to reach an XEB fidelity of 0.8% (with a sensitivity limit of roughly 0.1% owing to the sampling statistics). By comparison, performing the sampling task at 0.8% fidelity on a classical computer (containing about one million processor cores) took 130 seconds, and a precise classical verification (100% fidelity) took 5 hours. Given the immense disparity in physical resources, these results already show a clear advantage of quantum hardware over its classical counterpart.
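The quoted sensitivity limit is consistent with simple counting statistics: averaging over N samples leaves a statistical uncertainty of roughly 1/√N. A quick check, assuming that scaling (the function name is just for this example):

```python
import math


def xeb_sensitivity(num_samples):
    """Rough statistical uncertainty of an average over num_samples shots."""
    return 1 / math.sqrt(num_samples)


print(f"{xeb_sensitivity(1_000_000):.1%}")  # 0.1%
```

So a measured fidelity of 0.8% from one million samples sits several times above the noise floor.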

The authors then extended the circuits into the not-directly-verifiable supremacy regime. They used a broader set of two-qubit gates to spread entanglement more widely across the full 53-qubit processor and increased the number of cycles from 14 to 20. The full circuit could not be simulated or directly verified in a reasonable amount of time, so Arute et al. simply archived these quantum data for future reference — in case extremely efficient classical algorithms are one day discovered that would enable verification. However, the patch-circuit, elided-circuit and calculated XEB fidelities all remained in agreement. When 53 qubits were operating over 20 cycles, the XEB fidelity calculated using these proxies remained greater than 0.1%. Sycamore sampled the solutions in a mere 200 seconds, whereas classical sampling at 0.1% fidelity would take 10,000 years, and full verification would take several million years.
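To put the headline numbers side by side, here is the implied speed-up as plain arithmetic. The 10,000 years and 200 seconds come from the text above. The 2.5-day figure is IBM's counter-estimate as I remember it and is not from the article, so treat it as an assumption.

```python
SECONDS_PER_DAY = 24 * 3600
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

quantum_seconds = 200

# Google's estimate for classical sampling.
google_classical = 10_000 * SECONDS_PER_YEAR
# Assumed figure for IBM's counter-estimate (not from the article).
ibm_classical = 2.5 * SECONDS_PER_DAY

speedup_google = google_classical / quantum_seconds
speedup_ibm = ibm_classical / quantum_seconds

print(f"speed-up (Google's estimate): {speedup_google:.2e}")  # 1.58e+09
print(f"speed-up (IBM's estimate)   : {speedup_ibm:.0f}")     # 1080
```

Either way the quantum chip is faster, but the two estimates differ by about six orders of magnitude.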

This demonstration of quantum supremacy over today’s leading classical algorithms on the world’s fastest supercomputers is truly a remarkable achievement and a milestone for quantum computing. It experimentally suggests that quantum computers represent a model of computing that is fundamentally different from that of classical computers [4]. It also further combats criticisms [5,6] about the controllability and viability of quantum computation in an extraordinarily large computational space (containing at least the 2^53 states used here).

However, much work is needed before quantum computers become a practical reality. In particular, algorithms will have to be developed that can be commercialized and operate on the noisy (error-prone) intermediate-scale quantum processors that will be available in the near term [1]. And researchers will need to demonstrate robust protocols for quantum error correction that will enable sustained, fault-tolerant operation in the longer term.

Arute and colleagues’ demonstration is in many ways reminiscent of the Wright brothers’ first flights. Their aeroplane, the Wright Flyer, wasn’t the first airborne vehicle to fly, and it didn’t solve any pressing transport problem. Nor did it herald the widespread adoption of planes or mark the beginning of the end for other modes of transport. Instead, the event is remembered for having shown a new operational regime — the self-propelled flight of an aircraft that was heavier than air. It is what the event represented, rather than what it practically accomplished, that was paramount. And so it is with this first report of quantum computational supremacy.
