Trenki said:

I didn't look at the sources, but I suppose you should get a straight line in the graph. I suppose it's a problem in your timing code! Maybe the timer you use does not have enough resolution.

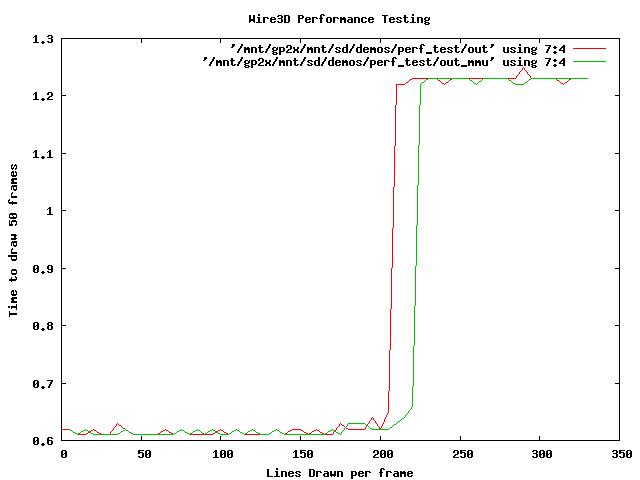

I thought it might be something like that, so I made a version that measures clock ticks instead of seconds (`(float)(clock() - start) / (float)CLOCKS_PER_SEC`), and I got the same shape of graph.
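
For anyone following along, here is a minimal sketch of that kind of tick-based timing in C. It is not the actual benchmark source: `time_frames`, `ticks_to_seconds`, `fps_from_ticks` and the `render_frame` callback are hypothetical names made up for illustration. Only `clock()` and `CLOCKS_PER_SEC` come from the standard `<time.h>`. Keep in mind that `clock()` reports processor time used by the program, not wall-clock time.

```c
#include <time.h>

/* Run a (hypothetical) frame callback `frames` times and return the
 * number of clock() ticks the whole loop took. */
clock_t time_frames(void (*render_frame)(void), int frames)
{
    clock_t start = clock();
    for (int i = 0; i < frames; ++i) {
        render_frame();
    }
    return clock() - start;
}

/* Convert a raw tick count to seconds. */
double ticks_to_seconds(clock_t ticks)
{
    return (double)ticks / (double)CLOCKS_PER_SEC;
}

/* Frames per second for `frames` frames that took `ticks` ticks.
 * The caller must make sure ticks is greater than zero. */
double fps_from_ticks(int frames, clock_t ticks)
{
    return (double)frames * (double)CLOCKS_PER_SEC / (double)ticks;
}
```

Logging the raw tick count next to the derived fps makes it easy to see how coarse the timer really is.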

Then I actually eyeballed the data and I found something a little surprising:

50 frames : 610000 ticks : 20 lines : 81.967216 fps

50 frames : 620000 ticks : 40 lines : 80.645164 fps

50 frames : 610000 ticks : 60 lines : 81.967216 fps

50 frames : 620000 ticks : 80 lines : 80.645164 fps

50 frames : 610000 ticks : 100 lines : 81.967216 fps

50 frames : 610000 ticks : 120 lines : 81.967216 fps

50 frames : 610000 ticks : 140 lines : 81.967216 fps

50 frames : 610000 ticks : 160 lines : 81.967216 fps

50 frames : 630000 ticks : 180 lines : 79.365082 fps

50 frames : 630000 ticks : 200 lines : 79.365082 fps

50 frames : 670000 ticks : 220 lines : 74.626869 fps

50 frames : 1230000 ticks : 240 lines : 40.650406 fps

50 frames : 1230000 ticks : 260 lines : 40.650406 fps

50 frames : 1230000 ticks : 280 lines : 40.650406 fps

So it seems like the measurements come in 10,000-tick chunks, which is weird. But even if we divide everything by 10,000, the timing is still accurate enough: it noodles around the 600-650K mark and then jumps to double that. What I'm saying is that if it can tell the difference between 610K and 630K, then the difference between 600K and 1200K is still significant.
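
To put numbers on that, here is a rough sketch that takes the tick readings from the log above, finds the largest step size they are all multiples of, and expresses the jump in units of that step. The function names are made up for this example. As a side note, the fps column is consistent with `CLOCKS_PER_SEC` being 1,000,000 on this machine (50 frames / 0.61 s ≈ 81.97 fps), which would make each 10,000-tick chunk 10 ms, but that is my inference from the numbers, not something I checked.

```c
#include <stddef.h>

/* Greatest common divisor by Euclid's algorithm. */
long gcd_long(long a, long b)
{
    while (b != 0) {
        long t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* Largest step that every reading is a whole multiple of.
 * This is only an upper bound on the real timer resolution,
 * inferred from the samples given. */
long tick_granularity(const long *ticks, size_t n)
{
    long g = 0;
    for (size_t i = 0; i < n; ++i) {
        g = gcd_long(g, ticks[i]);
    }
    return g;
}

/* How many timer steps separate two readings. */
long jump_in_steps(long before, long after, long step)
{
    return (after - before) / step;
}
```

Fed the distinct readings from the log (610000, 620000, 630000, 670000, 1230000), `tick_granularity` gives 10000, and the jump from 670000 to 1230000 works out to 56 steps. The run-to-run noise before the jump is only 1 or 2 steps, so a coarse timer alone can't explain it.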